Sarah Chen’s laptop fan had been whirring nonstop for three hours. The machine learning engineer watched her screen with growing frustration as her AI model crawled through another training cycle, the progress bar barely moving. “There has to be a better way,” she muttered, glancing at her electricity bill notification that had just popped up on her phone.

Sarah isn’t alone. Millions of developers, researchers, and businesses worldwide are hitting the same wall. The incredible AI boom has created an unexpected problem: our computers simply can’t keep up with the computational demands we’re placing on them.

But what if the solution isn’t faster chips or more powerful processors? What if it’s something as fundamental as replacing electricity with light itself?

The Speed-of-Light Solution That Could Change Everything

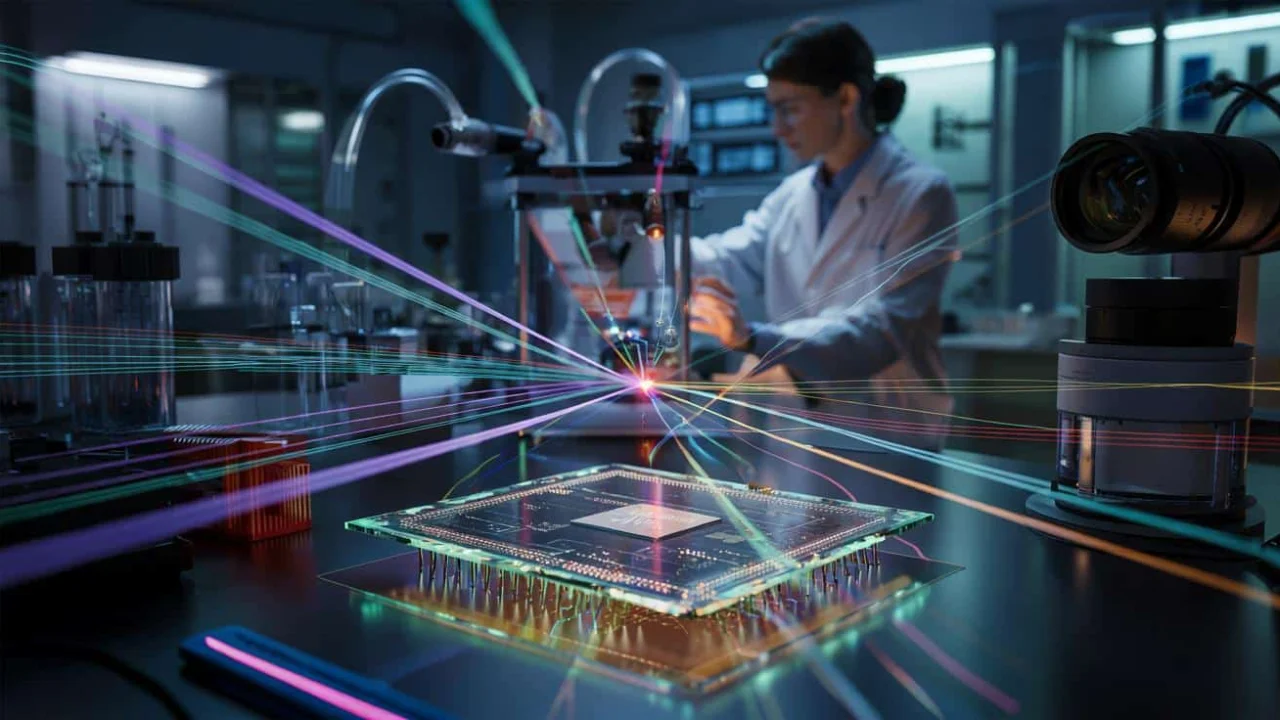

A possible way past the AI computing bottleneck has emerged from an unlikely collaboration between physicists and engineers. Their solution sounds like science fiction: performing AI calculations using beams of light instead of electrical circuits. The implications could be staggering.

“We’re essentially letting photons do the heavy mathematical lifting that usually exhausts our GPUs,” explains Dr. Michael Rodriguez, a computational physicist involved in optical computing research. “Light doesn’t get tired, doesn’t generate heat like electrons do, and travels at the ultimate speed limit of the universe.”

The problem they’re solving affects everyone who uses AI technology. Every time ChatGPT generates a response, every time your phone recognizes your face, and every time Netflix recommends a movie, massive mathematical operations called tensor calculations happen behind the scenes.

These calculations rely on something called matrix-matrix multiplication – imagine multiplying enormous spreadsheets of numbers together millions of times per second. Current AI systems use graphics processing units (GPUs) for this work, but even the most powerful chips are hitting physical limits.
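To make the spreadsheet analogy concrete, here is a minimal Python sketch of ordinary matrix-matrix multiplication as a conventional processor performs it. The function name `matmul` is our own illustration, not code from the research; it also counts the multiply-accumulate steps so you can see how quickly the work grows.

```python
def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m) with the textbook triple loop.

    Returns the product and the number of multiply-accumulate (MAC) steps,
    which is the work a processor must do for every such product.
    """
    n, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    m = len(b[0])
    result = [[0.0] * m for _ in range(n)]
    macs = 0
    for i in range(n):
        for j in range(m):
            total = 0.0
            for p in range(k):
                total += a[i][p] * b[p][j]  # one multiply-accumulate
                macs += 1
            result[i][j] = total
    return result, macs


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
product, macs = matmul(a, b)
print(product)  # [[19.0, 22.0], [43.0, 50.0]]
print(macs)     # 8
```

Multiplying an n × k matrix by a k × m matrix takes n·k·m multiply-accumulates, so two 4096 × 4096 matrices already require about 68.7 billion of them. GPUs speed this up by running many of these steps in parallel, but each one still costs time and energy.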

How Light-Based Computing Actually Works

The revolutionary approach, called Parallel Optical Matrix-Matrix Multiplication (POMMM), turns light waves into data carriers. Here is how it compares with conventional electronic AI computing:

| Traditional AI Computing | Light-Based AI Computing |
|---|---|
| Uses electrical signals through silicon chips | Uses light waves through optical components |
| Generates significant heat | Produces minimal heat |
| Limited by electrical resistance | Operates at light speed |
| High power consumption | Dramatically lower energy use |
| Sequential processing limitations | Massive parallel processing |

The key innovation lies in how researchers encode information onto light beams. They manipulate both the intensity and phase of light waves – think of it like using both the brightness and the timing of the wave peaks to carry data.
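In physics, a light wave's intensity and phase are commonly bundled into a single complex number, E = A·e^(iφ), where the amplitude A sets the brightness (intensity is A²) and φ sets the timing of the wave peaks. The sketch below assumes this textbook model; the helper names and the sign-in-phase scheme are hypothetical illustrations, not the researchers' actual encoding.

```python
import cmath
import math


def encode(amplitude, phase):
    """Represent one light wave as a complex field E = A * e^(i*phi).

    amplitude (A) sets the brightness (intensity is A**2);
    phase (phi, in radians) sets where the wave peaks fall in time.
    """
    return cmath.rect(amplitude, phase)


def decode(field):
    """Recover (amplitude, phase) from a complex field."""
    return cmath.polar(field)


def encode_signed(value):
    """Hypothetical scheme: |value| goes into the amplitude, the sign into the phase (0 or pi)."""
    return encode(abs(value), 0.0 if value >= 0 else math.pi)


field = encode_signed(-0.75)
amp, phase = decode(field)
print(round(amp, 6), round(phase, 6))  # 0.75 3.141593
print(round(field.real, 6))            # -0.75
```

Shifting the phase by π flips the wave upside down, which is one simple way a negative number can ride on light. An ordinary camera measures only intensity, so recovering the phase in practice requires extra techniques such as interfering the signal with a reference beam.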

“Instead of firing a laser repeatedly for each calculation, we pack multiple operations into a single burst of light,” notes Dr. Lisa Park, an optical engineering specialist. “It’s like solving dozens of math problems simultaneously instead of one at a time.”

The system works by letting light waves naturally interfere and combine as they pass through specially arranged optical components. These wave interactions physically perform the mathematical operations that AI systems need, without requiring traditional computer chips for the multiplications themselves.

- Light beams carry encoded tensor data

- Optical components manipulate wave properties

- Wave interference performs calculations automatically

- Results are decoded from the output light patterns

- Multiple operations happen in parallel
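The five steps above can be imitated with a toy numerical model. This is a simplified Python sketch under stated assumptions, not the POMMM optical layout: each optical element is treated as a complex transmission coefficient, waves arriving at the same output point simply add (superposition), and, hypothetically, each column of the second matrix travels on its own channel within one pulse. All function names are illustrative.

```python
def propagate(weights, fields):
    """Toy model of one optical layer.

    Each input field is scaled by a (possibly complex) transmission coefficient,
    and all contributions reaching the same output point add up as waves
    (superposition), so output i receives sum_j weights[i][j] * fields[j].
    """
    return [sum(w * e for w, e in zip(row, fields)) for row in weights]


def optical_matmul(a, b):
    """Compute a @ b by sending every column of b through the same layer.

    Hypothetical assumption: each column travels on its own channel (for example
    a separate spatial position) inside one light pulse, so the optics would
    process all columns in a single pass instead of one laser shot per column.
    """
    columns = [list(col) for col in zip(*b)]
    # Simulated one column at a time here; in the optical hardware these happen at once.
    outputs = [propagate(a, col) for col in columns]
    return [list(row) for row in zip(*outputs)]  # reassemble columns into rows


def detect_intensity(field):
    """A camera pixel records intensity |E|^2, which discards the phase."""
    return abs(field) ** 2


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
print(optical_matmul(a, b))       # [[19, 22], [43, 50]]
print(detect_intensity(-3 + 4j))  # 25.0
```

Because waves add together with their phases, a π phase shift (multiplying by -1) makes contributions cancel: `propagate([[1, -1]], [0.5, 0.5])` returns `[0.0]`, which is destructive interference performing a subtraction.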

The breakthrough also tackles a critical challenge for optical computing: scalability. While previous optical computers worked in isolation, this new approach could potentially be networked together like current GPU clusters.

What This Means for Your Digital Life

If optical computing succeeds in removing this bottleneck, it could reshape how we interact with artificial intelligence every day.

Smartphone users might experience instant AI responses instead of waiting for cloud processing. Video calls could feature real-time language translation without delays. Creative professionals could generate complex AI artwork or edit videos in seconds rather than hours.

“We’re looking at the possibility of AI assistance that feels truly instantaneous,” explains Dr. James Kim, a computer systems researcher. “Imagine asking your AI assistant a complex question and getting a thoughtful response faster than you can blink.”

The energy implications are equally significant. Current AI data centers consume massive amounts of electricity; by some estimates, ChatGPT alone uses as much power as a small city. Light-based computing could reduce these energy requirements by orders of magnitude.

For businesses, faster and cheaper AI computation could enable new possibilities:

- Real-time fraud detection in financial transactions

- Instant medical image analysis in hospitals

- Immediate traffic optimization in smart cities

- Live language translation in international meetings

- Instantaneous product recommendations in e-commerce

The technology could also democratize AI development. Currently, training large AI models requires expensive GPU farms that only major tech companies can afford. Optical computing might make powerful AI development accessible to smaller organizations and individual researchers.

However, challenges remain. The optical systems require precise alignment and controlled environments. Manufacturing costs are currently high, and integrating optical components with existing digital infrastructure presents engineering hurdles.

“We’re still in the prototype phase,” admits Dr. Rodriguez. “But the fundamental physics works beautifully. It’s now a matter of engineering optimization and cost reduction.”

The research, published in Nature Photonics, represents years of work combining quantum optics, computer engineering, and AI system design. The team successfully demonstrated their approach using standard optical components, suggesting that manufacturing could scale up relatively quickly.

Industry experts predict that commercial applications could emerge within five to ten years, initially in specialized data centers before expanding to consumer devices.

As AI systems continue growing more sophisticated, traditional computing approaches face increasingly severe limitations. Using light-speed processing to break through the AI bottleneck might be exactly what's needed to keep pace with our technological ambitions.

The next time your computer fan starts whirring during an AI task, remember that the solution might literally be as fast as light itself.

FAQs

How fast is light-based AI computing compared to current systems?

Light-based systems compute as light travels through the optical components, and a single pulse can carry many operations in parallel. Together, these could offer major speed improvements over electrical circuits, which face resistance and heat limitations.

Will this technology replace all computer chips?

Not entirely. Light-based computing excels at specific AI calculations like matrix multiplication, but traditional chips will still be needed for many other computing tasks and system control.

When will consumers see this technology in their devices?

Experts estimate commercial applications could begin appearing in 5-10 years, starting with data centers before reaching consumer electronics like smartphones and laptops.

How much energy could this save?

Early research suggests light-based AI computing could reduce energy consumption by orders of magnitude compared to current GPU-based systems, potentially cutting data center power usage dramatically.

Are there any downsides to optical computing?

Current challenges include high manufacturing costs, precise alignment requirements, and integration difficulties with existing digital infrastructure, though these are expected to improve with development.

Could this make AI development more accessible?

Yes, if optical computing reduces the cost and energy requirements of AI training, it could enable smaller organizations and individual researchers to develop powerful AI systems currently only accessible to major tech companies.